Doug Lea’s Memory Allocator(dlmalloc) Basics

Chapter 4 - Dynamic Memory Management(4.6)

- Book by Robert C. Seacord

Doug Lea’s Memory Allocator (dlmalloc)

A popular memory allocator. High efficiency. Using in Android. ptmalloc is derived from dlmalloc for better multi-threading performances, many linux systems are using it.

The GNU libc allocator can lag behind the current version of dlmalloc by up to a few years.

Sturcture

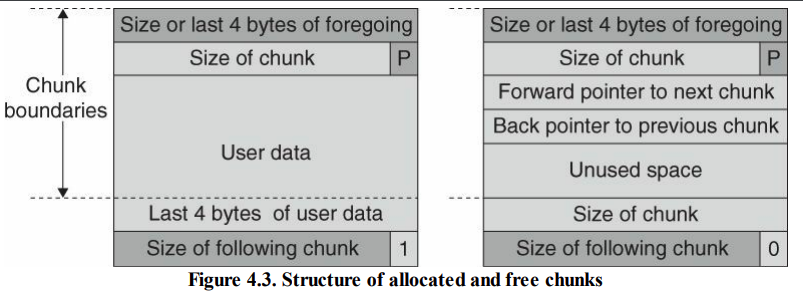

Free chunks are organized into double-linked lists. A free chunk contains forward and backward pointers to the next and previous chunks in the list to which it belongs.

- Sturcture of allocated chunks and free chunks

Apply the memory from kernel

-

< 256kbdmalloc will call brk(), and apply for a memory space, but the space is larger than the program requests, the rest will be kept for future use. The next time it won’t apply memory from the kernel. Forfree(), it won’t free the memory directly to kernel memory, but just mark it. -

> 256kbmmap() and mummap() are called, no more caching.

After applying the memory from kernel

Small Memory Chunk

Small memory chunk (< 248 bytes) will be stored as malloc_chunk:

struct malloc_chunk {

size_t prev_foot; /* Size of previous(for address) chunk (if free). */

size_t head; /* Size and inuse bits. */

struct malloc_chunk* fd; /* double-linked links -- used only if free. */

struct malloc_chunk* bk;

// fd for next malloc_chunk, bk for previous malloc_chunk

};

Small memory chunk size: 16, 24, 32, …, 248 (stored in 30 different linked list.)

Large Memory Chunk

Large memory chunk (> 248 bytes) will stored as malloc_tree_chunk.

struct malloc_tree_chunk {

/* The first four fields must be compatible with malloc_chunk */

size_t prev_foot;

size_t head;

struct malloc_tree_chunk* fd;

struct malloc_tree_chunk* bk;

struct malloc_tree_chunk* child[2]; // 2 children nodes

struct malloc_tree_chunk* parent; // the parent node

bindex_t index; // the index of the tree

};

Large memory chunks will be saved into 32 trees based on the size.

If the program is using the memory chunk, it will be removed from the linked list, so prev_foot, fd and bk are meaningless, can be used by the program.

Malloc State

struct malloc_state {

binmap_t smallmap; // map for smallbins, 0 means empty

mchunkptr smallbins[(NSMALLBINS+1)*2]; // for <30 + 2 <for 0 and 8-byte>> linkedin lists

binmap_t treemap; // map for treebins

tbinptr treebins[NTREEBINS]; // 32 trees

mchunkptr dv; // to store the rest of chunk when dividing into 2

size_t dvsize; // the size of dv

mchunkptr top; // the top memory chunk in the heap, better not allocate this chunk, if it is occupied, dlmalloc cannot free other memory that are not-in-use.

size_t topsize;

char* least_addr;

size_t trim_check;

size_t magic;

size_t footprint;

#if USE_MAX_ALLOWED_FOOTPRINT

size_t max_allowed_footprint;

#endif

size_t max_footprint;

flag_t mflags;

#if USE_LOCKS

MLOCK_T mutex;

#endif /* USE_LOCKS */

msegment seg;

};

static struct malloc_state _gm_;

How malloc() apply for memory

From Android Google Source - dlmalloc.c:

Basic algorithm: If a small request (< 256 bytes minus per-chunk overhead):

- If one exists, use a remainderless chunk in associated smallbin. (Remainderless means that there are too few excess bytes to represent as a chunk.)

- If it is big enough, use the dv chunk, which is normally the chunk adjacent to the one used for the most recent small request.

- If one exists, split the smallest available chunk in a bin, saving remainder in dv.

- If it is big enough, use the top chunk.

- If available, get memory from system and use it

Otherwise, for a large request:

- Find the smallest available binned chunk that fits, and use it if it is better fitting than dv chunk, splitting if necessary.

- If better fitting than any binned chunk, use the dv chunk.

- If it is big enough, use the top chunk.

- If request size >= mmap threshold, try to directly mmap this chunk.

- If available, get memory from system and use it

- The ugly goto’s here ensure that postaction occurs along all paths.

dlmalloc:

void* dlmalloc(size_t bytes) {

if (!PREACTION(gm)) { // gm is struct malloc_state

void* mem;

size_t nb;

// If a small request

if (bytes <= MAX_SMALL_REQUEST) { // 244 bytes

bindex_t idx;

binmap_t smallbits;

// modify the bytes to apply, consider 8-byte alignment and memory in malloc_chunk

nb = (bytes < MIN_REQUEST)? MIN_CHUNK_SIZE : pad_request(bytes);

// based on the size, calculate the index

idx = small_index(nb);

// Check whether the linked list or the adjacent lists got free chunks

smallbits = gm->smallmap >> idx;

/* OPTION 1 - use a remainderless chunk in associated smallbin */

if ((smallbits & 0x3U) != 0) { /* Too few bytes, Remainderless fit to a smallbin. */

mchunkptr b, p;

idx += ~smallbits & 1; /* Uses next bin if idx empty */

b = smallbin_at(gm, idx); // Get the linked list

p = b->fd; // The first free chunk in the list, which is going to be used.

assert(chunksize(p) == small_index2size(idx));

unlink_first_small_chunk(gm, b, p, idx); // remove p from linked list

set_inuse_and_pinuse(gm, p, small_index2size(idx));

mem = chunk2mem(p); // the pointer to the memory chunk to use

check_malloced_chunk(gm, mem, nb);

goto postaction;

}

else if (nb > gm->dvsize) {

if (smallbits != 0) {

/* OPTION 2 - Use chunk in next nonempty smallbin */

// Find an appropriate non-empty linked list in the small bins so that it is the most appropriate to host the request memory value as the differences is smallest.

mchunkptr b, p, r;

size_t rsize;

bindex_t i;

binmap_t leftbits = (smallbits << idx) & left_bits(idx2bit(idx));

binmap_t leastbit = least_bit(leftbits);

compute_bit2idx(leastbit, i);

b = smallbin_at(gm, i); // b is the appropriate linked list

p = b->fd; // first node to allocate

assert(chunksize(p) == small_index2size(i));

unlink_first_small_chunk(gm, b, p, i); // unlink p from b

rsize = small_index2size(i) - nb; // recalculate the free space in the list

// Fit here cannot be remainderless if 4-byte sizes

if (SIZE_T_SIZE != 4 && rsize < MIN_CHUNK_SIZE)

set_inuse_and_pinuse(gm, p, small_index2size(i));

else {

set_size_and_pinuse_of_inuse_chunk(gm, p, nb);

r = chunk_plus_offset(p, nb); // new memory chunk formed without nb

set_size_and_pinuse_of_free_chunk(r, rsize);

replace_dv(gm, r, rsize); // Replace dv with r in the malloc state

}

mem = chunk2mem(p); // pointer to program with CHUNK_OVERHEAD

check_malloced_chunk(gm, mem, nb);

goto postaction;

} // end if (smallbits != 0)

/* OPTION 3.1 - Allocate a small request from tree bins */

else if (gm->treemap != 0 && (mem = tmalloc_small(gm, nb)) != 0) {

check_malloced_chunk(gm, mem, nb);

goto postaction;

} // end else if (gm->treemap != 0 && (mem = tmalloc_small(gm, nb)) != 0)

} // end else if (nb > gm->dvsize)

} // end if (bytes <= MAX_SMALL_REQUEST)

else if (bytes >= MAX_REQUEST) // Edge case - larger than 0xffffffc0, too greedy, deny

nb = MAX_SIZE_T; /* Too big to allocate. Force failure (in sys alloc) */

// #define MAX_SIZE_T (~(size_t)0)

else {

/* OPTION 3.2 - Allocate a large request from tree bins, the amount to apply is larger than MAX_SMALL_REQUEST */

nb = pad_request(bytes); // modify the bytes to request by considering the 8-byte alignment, and the memory space of malloc_tree_chunk

if (gm->treemap != 0 && (mem = tmalloc_large(gm, nb)) != 0) {

check_malloced_chunk(gm, mem, nb);

goto postaction;

}

}

/* OPTION 4 - Get from dv */

if (nb <= gm->dvsize) {

size_t rsize = gm->dvsize - nb; // Remaining size of dv

mchunkptr p = gm->dv;

if (rsize >= MIN_CHUNK_SIZE) { // split dv

mchunkptr r = gm->dv = chunk_plus_offset(p, nb); // new dv after dividing, still can be used

gm->dvsize = rsize;

set_size_and_pinuse_of_free_chunk(r, rsize);

set_size_and_pinuse_of_inuse_chunk(gm, p, nb);

}

else { // exhaust dv

size_t dvs = gm->dvsize;

gm->dvsize = 0;

gm->dv = 0;

set_inuse_and_pinuse(gm, p, dvs);

}

mem = chunk2mem(p); // the pointer to the memory to be collected

check_malloced_chunk(gm, mem, nb);

goto postaction;

}

/* OPTION 5 - Split top chunk */

else if (nb < gm->topsize) {

size_t rsize = gm->topsize -= nb; // remaining size without nb

mchunkptr p = gm->top;

mchunkptr r = gm->top = chunk_plus_offset(p, nb); // new top chunk

r->head = rsize | PINUSE_BIT;

set_size_and_pinuse_of_inuse_chunk(gm, p, nb);

mem = chunk2mem(p); // the pointer to the memory to be collected

check_top_chunk(gm, gm->top);

check_malloced_chunk(gm, mem, nb);

goto postaction;

}

/* OPTION 6 - Apply Memory from the kernel */

mem = sys_alloc(gm, nb);

postaction:

POSTACTION(gm);

return mem;

}

return 0;

}

The unlink() Macro

#define unlink(P, BK, FD) { \

FD = P->fd; \

BK = P->bk; \

FD->bk = BK; \

BK->fd = FD; \

}

Reference

- Security Coding in C and C++. by Robert C. Seacord. Chapter 4.6 ISBN-13: 978-0-321-82213-0

- Android Google Source - dlmalloc.c

- dlmalloc(1)

- dlmalloc(2)

- dlmalloc(3)